It 'AI'-n't Necessarily So!

The Blind Squirrel's Monday Morning Notes, 10th June 2024.

This week, we are drawn back to the AI debate. The 🐿️ would much rather that AI took the form of Scarlett Johansson whispering (good!) email inbox management advice in his ear than have to deal with the scary sword lady AI bot from Netflix’s 3 Body Problem!

This does mean that we have to break our promise not to discuss ‘The Highlander™’, our (frustratingly not yet viral) nickname for Jensen Huang’s Nvidia.

We contemplate the width of the chip maker’s ‘moat’ and the risk of malinvestment among the hyper-scalers.

In Section 2 this week (for paid subs), we wonder whether or not the ‘go woke, go broke’ crew are going to lose their shirts trading their politics (again)! Are they making the same mistake in writing off Mexico, one of the best-performing emerging markets of recent years, “because they have elected a communist as Presidenta”?

Welcome! I'm Rupert Mitchell and this is my weekly newsletter on markets and investment ideas. While much of this letter is free (and I will never cut off any punchlines), please consider becoming a paid subscriber to receive the other 60% of the content and plenty of other good stuff!

The note this week starts with an apology. I am breaking my promise to try to avoid writing about Nvidia. To be honest, I am still pretty bitter that the 🐿️’s Magnificent Seven transition to The Highlander™ (‘There can be only one!’) analogy never caught on or went viral.

Admittedly, this rodent has a mixed track record with his calls on the stock. But I have learned my lesson over time! After a profitable period in 2022 on the short side of semiconductors, an expensive and short-lived attempt to fade the sector’s ‘AI frenzy’ in early 2023 has left me largely in ‘spectator mode’ since.

Fortunately, compliance restrictions also prevent me from making any foolhardy attempt to fade Nvidia’s extraordinary move over the past 18 months specifically.

I was safely on the sidelines by the time Jensen turned on the afterburners with his jumbo beat and guide in May of 2023.

In the meantime, we have sought to gatecrash the party via FOMO hedges and ‘cheaper’ AI derivative plays. In February, we looked at non-GPU data center capex in our ‘Seeing the light’ piece on coherent optics and then turned our thoughts to the opportunity created by the use of LLMs in the biotech sector (‘A(i) Prescription’).

Then in March, in ‘Who dares to fade the “Highlander Effect”?’, we were reluctant to join those in the commentariat who had been desperate to call time on the stock’s stellar run (and with it the US equity bull market) just as Nvidia was about to launch ‘Grace’ at its developer conference. Our view was reinforced by the fact that ARK Investment’s Cathie Wood was, at the same time, calling for a correction in semi stocks (having sold her own NVDA position at the 2022 lows)!

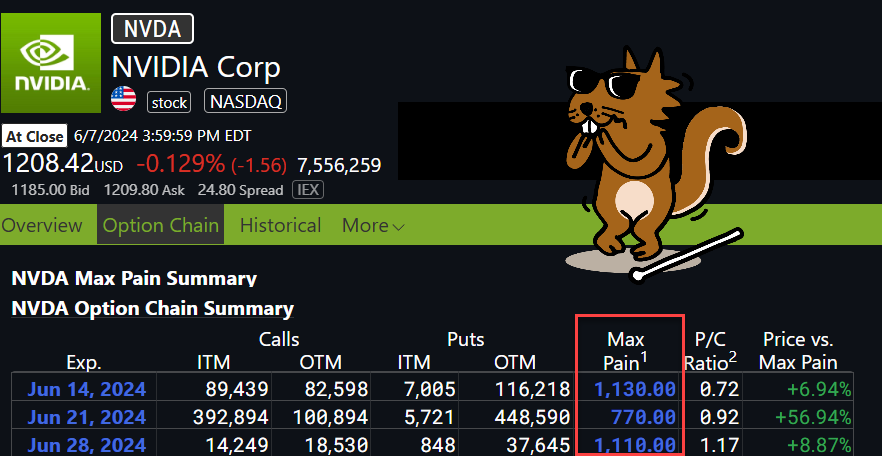

The final rationale for concluding that “I suspect that we can wait for a bit of time for the ‘Hammer to Fall’ on our swordsman, Jensen Huang” was that we had yet to see the company announce a stock split! Well, fresh off the back of last week’s boob-signature-gate, we have a 10:1 stock split scheduled for tonight! Is this the bell-ringing moment we needed to see?

My pal Le Shrub has done some great work on prior stock-split feeding frenzies. For me, there is a risk that a ‘top’ here is too obvious. The failed ‘Roaring Kitty’ pump of GameStop last Friday may have shrunk retail appetite for the soon-to-come ‘smaller ticket’ (a few hundred dollars versus a few thousand) Nvidia out-of-the-money call options, but some gamma squeeze craziness cannot be ruled out.
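Why does a split shrink the ticket size? A listed US equity option contract typically covers 100 shares. In a standard pricing model such as Black-Scholes, the premium scales in proportion to the share price when the strike is cut by the same split ratio. The sketch below uses purely hypothetical inputs, not actual Nvidia market data: a $1,200 stock, a 10% out-of-the-money one-month call, 50% implied volatility, 5% rates and no dividends. Black-Scholes (European-style) is my stand-in for how such options might be priced, not a claim about how they actually trade.

```python
from math import erf, exp, log, sqrt

CONTRACT_MULTIPLIER = 100  # one standard US equity option contract covers 100 shares


def norm_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))


def bs_call(spot: float, strike: float, years: float, vol: float, rate: float) -> float:
    """Black-Scholes price of a European call, per share, assuming no dividends."""
    d1 = (log(spot / strike) + (rate + 0.5 * vol**2) * years) / (vol * sqrt(years))
    d2 = d1 - vol * sqrt(years)
    return spot * norm_cdf(d1) - strike * exp(-rate * years) * norm_cdf(d2)


# Hypothetical inputs for illustration only (NOT market data).
SPOT_PRE_SPLIT = 1_200.0
STRIKE_PRE_SPLIT = 1_320.0  # 10% out of the money
YEARS = 30 / 365            # roughly one month to expiry
VOL = 0.50                  # assumed implied volatility
RATE = 0.05                 # assumed risk-free rate
SPLIT_RATIO = 10            # the 10:1 split

# Cost of one contract before the split.
pre = bs_call(SPOT_PRE_SPLIT, STRIKE_PRE_SPLIT, YEARS, VOL, RATE) * CONTRACT_MULTIPLIER
# After the split: spot and strike both divided by the ratio, multiplier unchanged.
post = bs_call(SPOT_PRE_SPLIT / SPLIT_RATIO, STRIKE_PRE_SPLIT / SPLIT_RATIO,
               YEARS, VOL, RATE) * CONTRACT_MULTIPLIER

print(f"One contract before the split: ${pre:,.0f}")
print(f"One contract after the split:  ${post:,.0f}")
print(f"Ratio: {pre / post:.1f}x")
```

With those made-up inputs, one contract costs a few thousand dollars before the split and a tenth of that afterwards. Real-world implied volatility and retail demand need not stay put, of course, which is where the gamma squeeze risk comes in.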

We have some small ‘skin in the game’ via our September put on the SOXX semiconductor ETF, which we ‘earned for free’ by rolling up a series of April calls (our FOMO hedge). Otherwise, I am content to be in the cheap seats, observing with a big box of popcorn!

I think the bigger deal for Nvidia is its role in what I am starting to see as the heightened risk of an AI malinvestment boom. The company’s revenue growth over the past 18 months has been beyond spectacular.

Sell-side research has reacted in the only way it knows how to. With linear extrapolation!

The analysts also continue to see no letup in the gross margins that Jensen should be able to hold on to until [checks notes] 2027! These forecasts have aroused the interest of the US antitrust cops (for whatever that is worth!).

But just how wide is that moat? Huang’s fellow southern Taiwan native, AMD CEO Lisa Su, reckons that her AI roadmap will be competitive with Nvidia’s. I found out this weekend that Jensen and Lisa are actually cousins! However, regular industry competition is not Jensen’s biggest threat. His biggest customers, the cloud hyper-scalers (Amazon, Alphabet and Microsoft), are seeking to design him out of their data centers with their own chips.

With profound apologies to Ira Gershwin, the Porgy and Bess show tune may have little David (Jensen) causing Goliath (the big tech giants) to “lay down and dieth”, but “it AI-n’t necessarily so”! (I hope the reference to Jonah’s whale in the cover art this week is not too obscure; I just love the scansion of “he made his home in dat fish’s abdomen”.)

The cost of building advanced AI data centers is already eye-watering, but if 49% of the bill-of-materials component of the capex represents Nvidia’s gross margin on H100 GPUs, Goliath AI-n’t lying down for long!

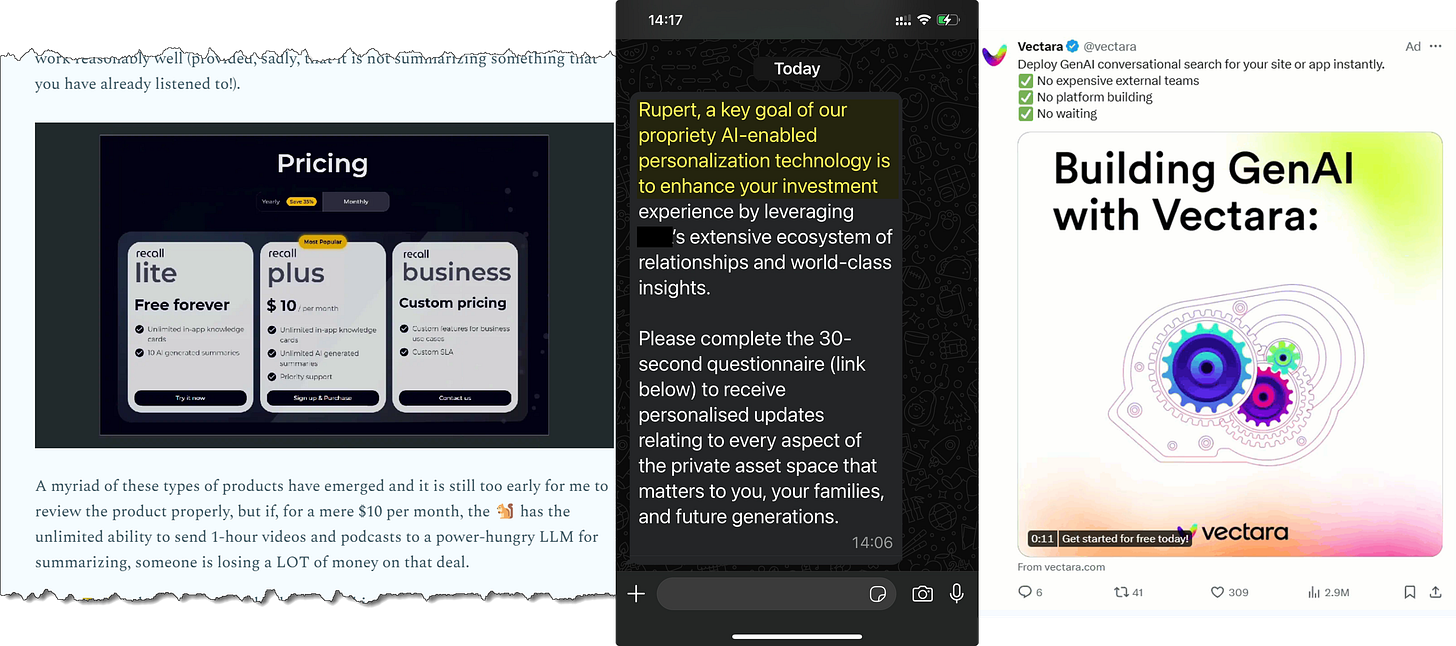

As of now, the hyper-scalers (and their shareholders) are ‘all-in’ when it comes to this capex runway. We are in the ‘build it and they will come’ phase of the journey. Last week, the 🐿️ was riffing on the proliferation of AI-enabled ‘B2B’ and ‘B2C’ applications currently being offered to us at what appear to be heavily subsidized prices. I cited my new GetRecall.ai podcast summarizer as an example.

On Friday, I was texted an invitation by a local wealth manager to complete a survey that would lead to ‘AI-enabled investment recommendations’. Strewth! (Although I doubt they paid that much for a survey product.) My Twitter feed is being constantly bombarded with ads trying to sell GenAI plug-ins for websites and apps, all of them offering free or very low-cost access to LLMs.

This Vectara product offers model access that is “Free up to 15,000 queries, 15,000 generative requests per month, and 50 MB account size. 20,000 pages of text (enough to load the entire Harry Potter series 5x). Starting at $0/month.” The consumer or enterprise AI ‘killer app’ still appears to elude the market. Neat gimmicks, but where is the return on all this investment going to come from?

For now, picks and shovels to the AI gold rush are selling briskly, but this is no 19th Century California. Some nuggets are going to need to come out of the riverbed pretty soon or there is going to be a Metaverse-style investor rebellion and capex budgets will need to be cut sharply. The froth of hype and excitement could recede as rapidly as it emerged in late 2022.

Surely the 🐿️ is missing something? I devoted a big chunk of this weekend to reading Leopold Aschenbrenner’s June 2024 paper on Artificial General Intelligence (‘AGI’), ‘Situational Awareness’. The paper clearly has an agenda. Leopold is an AI evangelist and one of the (definitely(!) non-Sam Altman-aligned) rebels no longer on the ‘Superalignment’ team at OpenAI.

I am prepared to take Leopold’s forecasts about the pace and scope of potential AI development at face value. Frankly, the list of folks with the knowledge and ability to interrogate these statements intelligently is probably pretty small!

I have read much about LLMs starting to run out of internet data to scrape, the so-called ‘data wall’. The use of synthetic data (i.e. training AI models on AI-generated content) sounds like a high-risk strategy that could lead to ‘model collapse’ and compound existing biases and errors within the LLMs.
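For the curious, here is a deliberately crude toy of the feedback loop behind ‘model collapse’. The ‘model’ is just a bell curve (a mean and a standard deviation), and each generation it is refitted only to a small sample of its own output. The sample size, generation count and random seed are my arbitrary choices. Real LLM training is vastly more complex, so treat this as an illustration of the mechanism rather than evidence about any actual model.

```python
import random
import statistics


def simulate_model_collapse(generations: int, samples_per_generation: int,
                            seed: int = 42) -> list[float]:
    """Toy 'model collapse': each generation fits a Gaussian (mean, std) to samples
    drawn only from the previous generation's fitted Gaussian.
    Returns the fitted standard deviation at every generation (index 0 = real data)."""
    rng = random.Random(seed)
    mean, std = 0.0, 1.0  # generation 0: the 'real world' distribution
    history = [std]
    for _ in range(generations):
        # 'Synthetic data': samples produced by the current model, not the real world.
        data = [rng.gauss(mean, std) for _ in range(samples_per_generation)]
        # Refit by maximum likelihood; pstdev (dividing by n) is the MLE estimate.
        mean, std = statistics.fmean(data), statistics.pstdev(data)
        history.append(std)
    return history


stds = simulate_model_collapse(generations=200, samples_per_generation=10)
for gen in (0, 50, 100, 200):
    print(f"generation {gen:>3}: fitted std = {stds[gen]:.2e}")
```

Each refit on a small sample tends to understate the spread, and nothing from the real world ever corrects it. Over the generations the fitted standard deviation drifts towards zero, and the tails (the rare, interesting stuff) are the first casualties.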

Leopold suggests that a process akin to the training of AlphaGo might work:

“In step 2, AlphaGo played millions of games against itself. This let it become superhuman at Go: remember the famous move 37 in the game against Lee Sedol, an extremely unusual but brilliant move a human would never have played.”

Notwithstanding the bluster coming from the Silicon Valley startups, this still feels like a major hurdle for AI development. Leopold is more bullish than I am about the commercial prospects for enterprise AI ‘co-pilots’:

“For example, there are around 350 million paid subscribers to Microsoft Office—could you get a third of these to be willing to pay $100/month for an AI add-on? For an average worker, that’s only a few hours a month of productivity gained; models powerful enough to make that justifiable seem very doable in the next couple years.”

The 🐿️ struggles to see Microsoft adding roughly $140bn per annum of MS Office co-pilot seat subscriptions to its revenue line. Certainly not from me, unless ‘Samantha’ is going to be whispering into my AirPods!
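For those who like to check the working, Leopold’s hypothetical multiplies out as below. This is a minimal sketch: the variable and function names are mine, and the only inputs are the three figures in his quote (none of them Microsoft guidance).

```python
# Back-of-envelope check of Leopold's hypothetical Office AI add-on scenario.
# All three inputs come from the quoted passage; none is Microsoft guidance.
PAID_OFFICE_SUBSCRIBERS = 350_000_000  # "around 350 million paid subscribers"
ADOPTION_SHARE = 1 / 3                 # "a third of these"
PRICE_PER_MONTH_USD = 100              # "$100/month for an AI add-on"
MONTHS_PER_YEAR = 12


def implied_annual_revenue(subscribers: int, share: float, monthly_price: float) -> float:
    """Implied yearly add-on revenue in US dollars."""
    return subscribers * share * monthly_price * MONTHS_PER_YEAR


revenue = implied_annual_revenue(PAID_OFFICE_SUBSCRIBERS, ADOPTION_SHARE, PRICE_PER_MONTH_USD)
print(f"Implied annual revenue: ${revenue / 1e9:,.0f}bn")
```

On those assumptions the add-on would be worth roughly $140bn of revenue a year. That is the scale of ambition the 🐿️ struggles to see materializing.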

However, the math behind the ‘build capex’ to support AGI development is set out in ways that are super clear to this rodent. Compute scale collides with physical-world constraints pretty rapidly.

Translating the progress roadmap into hardware sees chip demand exceed TSMC’s current wafer production capacity by 2028. And judging by the feedback from Computex 2024 in Taipei last week, Sam Altman is not building his $7trn worth of AI chip fabs any time soon!

“Sam Altman, he’s just too aggressive, too aggressive for me to believe.” TSMC chair and CEO, CC Wei.

Then we must deal with the energy requirements. The numbers on extra power demand have been well covered elsewhere (hunt down a copy of Chase Taylor’s ‘Data and Energy’ deck if you can). We are fast reaching the point where required demand is measured in multiples of Hoover Dams and nuclear reactors.

The last third of the paper covers societal, national security and geopolitical risks associated with AI progress. Scenarios in which AI’s transition from ‘neat’ chatbots and co-pilots to ‘superintelligence’ takes place without the proper guardrails, checks and balances are undoubtedly problematic for us all.

Important stuff. We cannot afford to mess this up. I have been having nightmares about paperclip factories since reading Nick Bostrom’s book 10 years ago! I do strongly agree with Leopold’s final remarks on AGI realism and the narcissistic ‘e/accs’ of Silicon Valley. They merit reproducing in full:

“This is all much to contemplate—and many cannot. “Deep learning is hitting a wall!” they proclaim, every year. It’s just another tech boom, the pundits say confidently. But even among those at the SF-epicenter, the discourse has become polarized between two fundamentally unserious rallying cries.

On the one end there are the doomers. They have been obsessing over AGI for many years; I give them a lot of credit for their prescience. But their thinking has become ossified, untethered from the empirical realities of deep learning, their proposals naive and unworkable, and they fail to engage with the very real authoritarian threat. Rabid claims of 99% odds of doom, calls to indefinitely pause AI—they are clearly not the way.

On the other end are the e/accs. Narrowly, they have some good points: AI progress must continue. But beneath their shallow Twitter shitposting, they are a sham; dilettantes who just want to build their wrapper startups rather than stare AGI in the face. They claim to be ardent defenders of American freedom, but can’t resist the siren song of unsavory dictators’ cash. In truth, they are real stagnationists. In their attempt to deny the risks, they deny AGI; essentially, all we’ll get is cool chatbots, which surely aren’t dangerous. (That’s some underwhelming accelerationism in my book.)

But as I see it, the smartest people in the space have converged on a different perspective, a third way, one I will dub AGI Realism.”

But where does that leave us with markets? AI leaders like Nvidia are now priced for perfection. The considerable impediments to the AI revolution growing commercially into current valuation multiples are being ignored by the market. For now. Investors seem supportive of the significant investments being made by the hyper-scalers in the AI ‘arms race’. Again, for now.

Fading the momentum of the likes of The Highlander™ directly is a high-risk strategy. The 🐿️ is happy to take the view that the value of the entire US electric utility sector has got ahead of itself (see ‘Fading the Utes’). However, to those looking to fade Jensen’s stock split tonight, I wish good fortune. A broader mix of investment themes in this market would be welcome for sure!

The 🐿️ will be watching with his popcorn, hoping that the AI bot that is currently scraping this note for posterity does not decide to take offence and send Arnie back in time to exact some retribution!

That’s all for the front section this week. In Section 2 (for paid subscribers), we wonder whether or not the ‘go woke, go broke’ crew are going to lose their shirts trading their politics (again)!

Remember in March when the obituaries were being written for Alphabet (GOOG) after the botched launch of its ‘woke’ chatbot, Gemini? So does the 🐿️! 😉

Are they making the same mistake in writing off Mexico, one of the best-performing emerging markets of recent years, “because they have elected a communist as Presidenta”? We believe there is a more nuanced take! In other words, ‘it AI-n’t necessarily so!’

In preparation for that, let Oscar Peterson, the “Maharaja of the keyboard”, explain.